Artificial intelligence, machine learning and why educators need to skill up now

Erica Southgate

When did you first become aware that artificial intelligence (AI) was part of your everyday world? Was it when you first used a smartphone assistant, got a product suggestion or tagged a friend on social media, or went through a facial recognition scan at passport control? Now consider when you first thought about the use of AI in education. Was it when you first saw a robot being used in class and wondered just how smart it really was, or during the NAPLAN automated essay marking controversy, or the trials to replace roll call with facial recognition technology? Perhaps, like many people, you had not realised, or had only vaguely considered, where AI is infused into computing applications or how it works. In this article, I offer an introduction to AI and its subfield of machine learning (ML) and describe some of its uses in education. I also explore some important ethical and governance issues related to the technology.

Artificial intelligence (AI)

AI has been defined as:

a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations, or decisions influencing real or virtual environments. AI systems are designed to operate with varying levels of autonomy (OECD, 2019).

Although the field of AI computing has been around since the 1950s, the early 2000s saw advances in the AI-related areas of computer vision, graphics processing and speech recognition technology (Mitchell and Brynjolfsson, 2017). Access to ‘big data’ and the tools to harvest it, the ability to store and manage it in the cloud, and the growth of online communities that share code and solve problems collectively combined to drive rapid innovation in AI. Big data refers to the growth, availability and use of information from a variety of sources such as the internet, sensors, and geolocation signals from devices; it is characterised by its volume, variety and velocity (how fast it is being added to, harvested and used in real time) (Michalik, Štofa, and Zolotova, 2014).

Science fiction is replete with narratives about superintelligent machines creating dystopian futures. However, this type of AI does not exist. At present we are in an era of narrow AI (Table 1). Narrow AI can perform the single or focused task it was designed for, sometimes with an efficiency or effectiveness that outperforms humans (for example, AI-powered search engines can locate and organise vast amounts of information far faster than a human could, even if they sometimes amplify biases). There is currently no general AI. General AI would exhibit the wide-ranging intelligent and emotional characteristics associated with humans and display a ‘theory of mind’: an awareness of its own mental states and those of others. And, as noted above, there is certainly no superintelligent AI of the kind found in science fiction. This is a good thing, because humans are still grappling with the benefits and risks of narrow AI.

AI can be embodied in robots (although not all robots have AI), or it can be disembodied and invisibly infused into computing programs (like search engines or map and navigation apps). Both children and adults often overestimate the intelligence of AI and are prone to anthropomorphising it (attributing human qualities to it), in both its robot and disembodied computer program forms (Faggella, 2018). People also tend to over-trust robots, even when the robot makes obvious errors. For example, in one experiment people followed a robot's directions during a simulated but realistic fire emergency even when it was obvious that the robot was making mistakes in guiding them (Wagner, Borenstein and Howard, 2018).

Table 1: Types of artificial intelligence, from Southgate et al. (2018), adapted from Hintze (2016).

| Category | Type | Example | Description |
|---|---|---|---|
| Superintelligence | | None, as this type of AI does not exist | AI that exceeds human intelligence in every field (sometimes called the singularity). |
| General AI | Self-awareness | None, as this type of AI does not currently exist | AI at this level would extend the ‘theory of mind’ to predict the internal states of others. Having achieved human-like consciousness, it might choose to exhibit non-human abilities. |
| General AI | Theory of mind | None, as this type of AI does not currently exist | This type of AI would have an updatable representation of the world that includes an understanding that other entities in the world also have their own internal states. |
| Narrow AI | Limited memory AI | Virtual assistants, self-driving cars | This type of AI receives current input and adds pieces of this input to its programmed representation of the world. This can change the way the AI makes current or new decisions. |
| Narrow AI | Reactive AI | AI chess player | Designed for a specific task, this AI receives input and acts on it. It cannot be applied to different tasks, and past experiences do not affect current decisions. |
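For readers who want to see the difference between the two narrow AI rows in concrete terms, here is a toy sketch in Python. The driving scenario, the speed threshold of 50 and the three-reading memory window are illustrative assumptions, not how any real self-driving car works; the point is only that a reactive agent responds to the current input alone, while a limited memory agent folds recent inputs into its picture of the world.

```python
from collections import deque


def reactive_agent(car_ahead_speed: float) -> str:
    """Reactive AI: the action depends only on the current input; nothing is remembered."""
    return "slow down" if car_ahead_speed < 50 else "keep pace"


class LimitedMemoryAgent:
    """Limited memory AI: recent inputs are added to a small internal picture of the world."""

    def __init__(self, window: int = 3) -> None:
        # Only the last `window` readings are kept, hence "limited" memory.
        self.recent_speeds = deque(maxlen=window)

    def act(self, car_ahead_speed: float) -> str:
        self.recent_speeds.append(car_ahead_speed)
        average = sum(self.recent_speeds) / len(self.recent_speeds)
        # The decision uses the remembered average, not just the latest reading.
        return "slow down" if average < 50 else "keep pace"


agent = LimitedMemoryAgent(window=3)
print(reactive_agent(40), reactive_agent(40))
print(agent.act(40), agent.act(70), agent.act(40))
```

Given the same reading of 40 twice, `reactive_agent` returns "slow down" both times, whereas the limited memory agent returns "slow down" the first time and "keep pace" the second, because the readings it remembers have changed in between.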

Machine learning (ML)

Today, talk of AI invariably involves the subfield of machine learning (ML). Maini and Sabri (2017) define ML as:

(A) subfield of artificial intelligence. Its goal is to enable computers to learn on their own. A machine’s learning algorithm enables it to identify patterns in observed data, build models that explain the world, and predict things without having explicit pre-programmed rules and models (p.9).

The field of ML involves getting algorithms to learn through experience (an algorithm is a set of instructions that tells a computer or machine how to carry out an operation). Computing systems with ML learn from the data they receive, without needing to be explicitly programmed with the rules for doing so.
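As a minimal sketch of what ‘learning from data rather than explicit programming’ can mean, the Python below never states the rule for flagging an email as spam; it searches labelled examples for the cut-off that makes the fewest mistakes. The spam scenario, the single ‘suspicious word count’ feature and the example data are hypothetical, and this one-feature threshold (sometimes called a decision stump) is far simpler than real ML systems.

```python
def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Choose the cut-off that misclassifies the fewest labelled examples.

    Each example is (feature_value, label). Nobody writes the rule by hand:
    every observed value is tried as a candidate cut-off and checked against the data.
    """
    if not examples:
        raise ValueError("need at least one labelled example")
    best_threshold, best_errors = examples[0][0], len(examples) + 1
    for candidate, _ in examples:
        # Predict True (spam) for every value at or above the candidate cut-off.
        errors = sum((value >= candidate) != label for value, label in examples)
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


def predict(value: float, threshold: float) -> bool:
    """Apply the learned rule to a new, unlabelled case."""
    return value >= threshold


# Hypothetical labelled data: (number of suspicious words, labelled as spam by a person).
training = [(0, False), (1, False), (2, False), (5, True), (7, True)]
threshold = learn_threshold(training)
print(threshold, predict(6, threshold), predict(3, threshold))
```

With this training data the learned cut-off is 5. Adding one more labelled example, an email with 4 suspicious words marked as spam, and rerunning `learn_threshold` moves the cut-off to 4: the rule changed because the data changed, not because anyone rewrote the code.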

There are different types of ML. Some examples include:

- Supervised learning: Qualified people label or classify initial input data to train an algorithmic model to identify patterns and make predictions when new data is given to it. The algorithm learns from experience that is guided by a human labelling the data.

- Unsupervised learning: In this type of ML, algorithms create their own structure (features) that can be used to detect patterns and classifications in unlabelled data. Unsupervised learning is used to explore and detect patterns when an outcome is unknown or not predetermined. With large enough data sets, unsupervised learning algorithms could potentially identify patterns in behaviour or other phenomena that were previously unknown.

- Reinforcement learning: An algorithm interacts with a specific environment and finds the best outcome through trial and error, rather than from labelled training data: ‘The machine is trained to make specific decisions. … (It) learns from past experience and tries to capture the best possible knowledge to make accurate … decisions’ (Ramzai, 2020).

- Deep learning: Associated with artificial neural networks (ANNs), this type of ML is inspired by the way neurons connect in the human brain. It has numerous layers that interact to model data and make inferences. Layers at lower levels of abstraction solve chunks of a problem and pass these partial solutions to layers at higher levels, which combine them into a larger solution (LeCun, Bengio and Hinton, 2015). ANNs are ‘organized into layers of nodes, and they’re “feed-forward,” meaning that data moves through them in only one direction (so that an) individual node might be connected to several nodes in the layer beneath it, from which it receives data, and several nodes in the layer above it, to which it sends data’ (Hardesty, 2017). Deep learning is used to interpret complex data, for example in natural language processing, which involves complicated vocabularies, and in machine vision, which involves intricate pixel information (Maini and Sabri, 2017).
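The feed-forward idea in the Hardesty quotation can be sketched in a few lines of plain Python. Each node takes the outputs of the layer beneath it, weights them, adds a bias and passes the result through an activation function; values only ever move upwards, layer by layer. The weights below are made up for illustration and the network has just one hidden layer; in deep learning there are many more layers, and the weights are learned from training data rather than written by hand.

```python
import math


def relu(x: float) -> float:
    """Pass positive signals through unchanged; silence negative ones."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases, activation):
    """Every node weights all inputs from the layer beneath, adds its bias and applies the activation."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def feed_forward(inputs, layers):
    """Data moves in one direction only: each layer's output becomes the next layer's input."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations


# Hypothetical hand-picked weights: 2 inputs -> 3 hidden nodes -> 1 output node.
LAYERS = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, 0.2], relu),
    ([[1.0, -1.0, 0.5]], [0.0], sigmoid),
]

print(feed_forward([1.0, 2.0], LAYERS))
```

Changing any single weight changes the final output, which hints at why explaining a network with millions of learned weights is so difficult.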

Developing a basic understanding of ML is important for three reasons: knowledge about algorithmic processes helps demystify the technology; it empowers us to better identify when AI is present and may be intervening in our lives through automated ‘nudging’ based on machine predictions and classifications; and it allows us to proactively ask serious questions about the predictions and classifications generated by machines and their potential for bias, error and discrimination. To elaborate on this last point:

Amazon stopped using a hiring algorithm after finding it favoured applicants based on words like “executed” or “captured” that were more commonly found on men’s resumes. … Another source of bias is flawed data sampling, in which groups are over- or underrepresented in the training data. For example, Joy Buolamwini at MIT working with Timnit Gebru found that facial analysis technologies had higher error rates for minorities and particularly minority women, potentially due to unrepresentative training data (Manyika, Silberg and Presten, 2019).
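One practical response to the kind of bias described by Buolamwini and Gebru is to report error rates separately for each group rather than a single overall accuracy figure, and to check how each group is represented in the training data. The Python sketch below does both. The group labels and records are hypothetical, and a real audit would need far larger samples and careful thought about how groups are defined.

```python
from collections import Counter


def error_rate_by_group(records):
    """records: (group, predicted_label, true_label) tuples from a labelled test set."""
    totals, errors = Counter(), Counter()
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def representation(training_groups):
    """Share of the training data that comes from each group."""
    counts = Counter(training_groups)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


# Hypothetical evaluation of a face-matching system on a small labelled test set.
test_records = [
    ("group A", "match", "match"),
    ("group A", "match", "match"),
    ("group A", "match", "match"),
    ("group A", "no match", "match"),
    ("group B", "no match", "match"),
    ("group B", "match", "match"),
]
print(error_rate_by_group(test_records))
print(representation(["group A"] * 8 + ["group B"] * 2))
```

Here the system is wrong for one in four group A cases but one in two group B cases, a gap that a single overall error rate (two in six) would hide.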

One of the key issues is the often ‘black box’ nature of ML. This means that the algorithmic process between inputs and outputs is not transparent because the algorithms are either (1) proprietary (the property of companies and governments who will not open them to independent review); or (2) so complex in their operation, like ANNs, that the machine’s decision-making processes are not wholly explainable even to the scientists who develop the systems (Campolo, Sanfilippo, Whittaker and Crawford, 2017). ‘Black box’ AI has limited transparency (and therefore limited contestability) and can materially affect the options people are given and their life opportunities: it can determine whether someone gets a job interview or a loan, which welfare recipients get a debtor’s notice, which learners get categorised as ‘at risk’ of attrition or failure, or who has access to a particular curriculum pathway in an intelligent tutoring system.

AI and education

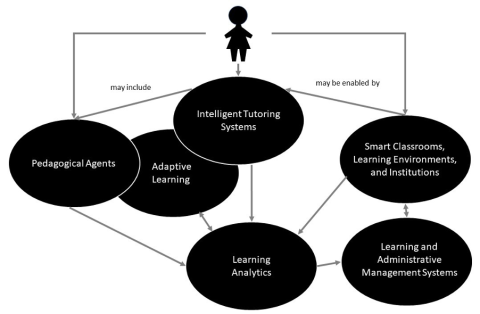

AI is present in many applications used in the classroom (for example, Photoshop). There is also a field of research called AI in Education (AIED), which has existed since the 1970s. This field is interested in developing systems that automate routine teaching tasks and facilitate learning, including intelligent (adaptive) tutoring systems, pedagogical agents in learning applications (characters that can help learners) and robomarking (for an overview see Luckin, Holmes, Griffiths, and Forcier, 2016). AI also plays an important role in generating data analytics and in mining data for insights. Some envision educational institutions as ‘smart’ environments that are part of the Internet of Things (IoT), where data from sensors in the environment and from official and personal devices are harvested to deliver efficiencies. For now this remains largely a future vision, although there continues to be great interest in harvesting geolocation and biometric data (data from people’s bodies, such as facial recognition, pupil dilation and gaze patterns) in educational contexts. Figure 1 provides an overview of where AI may be present in educational computing systems.

Figure 1. Overview of typical AIED applications and their relationship to each other (adapted from Southgate et al., 2018).

A recent review of AIED in higher education (universities are the main driver and incubator of the technology for education) found generally weak connections to pedagogical theory and perspectives, limited critical reflection on the challenges and risks of AIED, and a need for more research on the ethical implications of using the technology in education (Zawacki-Richter, Marín, Bond and Gouverneur, 2019).

What are some of the ethical and governance issues to consider?

AI with ML may yield benefits for education in terms of both administration and learning, and it is fair to say that not all AI-powered applications carry the same level of ethical risk. Arguably, however, advances in AI have outstripped legal and regulatory oversight. This means that educators must lead the way in asking ethical questions about the technology. Elsewhere I have provided a practical framework for educators to ask ethical questions about the design, implementation and governance of AI in relation to five ethical pillars, which I touch on here:

1. Awareness: We need to develop foundational knowledge about the technology to raise awareness of what it is, where it is present, what it is doing, how it might be used for good, and when it should never be used. Many people ‘are not aware of the multiplicity of agents and algorithms currently gathering and storing their data for future use’ (boyd and Crawford, 2012, p.673). Educators, students, and parents and care-givers should be made fully aware of AI data harvesting, storage, and third-party and other sharing arrangements, with a focus on obtaining strong, informed, opt-in consent. Promoting awareness of AI alongside informed consent will provide some protection from deception and allow all stakeholders an opportunity to be involved in deciding the role and parameters of the technology in education. Approaches to raising awareness need to take into account groups who may have lower literacy and digital literacy skills.

2. Explainability: One of the foundational principles of education is explainability. This is the capacity of, and commitment by, educators and those leading and operating educational institutions to explain, with proficiency, clarity and transparency, pedagogical and administrative processes and decisions, and to be held responsible for their impact. Like awareness, this is a pedagogical project: it seeks to provide all stakeholders with genuine, consultative and public opportunities to ask questions about applications of technology in school systems, and to have these questions answered in an honest, intelligible (plain English) and timely way. It also involves educators holding manufacturers, vendors and procurers of AI technology responsible for explaining:

- how the application upholds human rights;

- what the technology should do, can do and can’t do;

- the educational and societal values and norms on which it was/is trained and acts;

- the learning and pedagogical theory and domain knowledge on which it is based;

- evidence of its efficacy for learning across diverse groups of students;

- arrangements for data collection, deidentification, storage and use including third party or other sharing agreements, and those for sensitive information such as biometrics or measures embedded in affective computing applications;

- if algorithmic ‘nudging’ is part of the system, how it complies with ethical principles; and

- full, timely disclosure of potential or actual benefits and risks, and any harm that may result from a system.

3. Fairness: Fairness is used in several ways in AI ethics. The first relates to the potential social inequality that AI is forecast to generate over the coming decades through structural economic shifts caused by automation. The second relates to ensuring that the potential benefits of interacting with AI are fairly distributed and that the burdens of experimental use are minimised. The third area, and one that has garnered a lot of interest, involves AI bias. There are many publicised cases of AI bias involving sexism, racism and other forms of discrimination (Campolo et al. 2017). When AI-powered systems predict outcomes for, or categorise, individuals or groups, they subtly and overtly influence how we understand those people, and this can lead to discrimination and stigma even when humans are in the automated decision-making loop. Campolo et al. (2017) recommend that standards be established to track the provenance, development and use of training datasets throughout their lifecycle in order to better understand, monitor and respond to issues of bias and representational skew. In addition, Wachter and colleagues (2020) elaborate on the challenges AI poses for non-discrimination law, including the possibility that the technology will generate new forms of discrimination that are not immediately discernible to humans:

Compared to human decision-making, algorithms are not similarly intuitive; they operate at speeds, scale and levels of complexity that defy human understanding, group and act upon classes of people that need not resemble historically protected groups, and do so without potential victims ever being aware of the scope and effects of automated decision-making. As a result, individuals may never be aware they have been disadvantaged and thus lack a starting point to raise a claim under non-discrimination law (Wachter, Mittelstadt and Russell, 2020, p.6).

4. Transparency: An ‘important underlying principle is that it should always be possible to find out why an autonomous system made a particular decision (most especially if that decision has caused harm)’ (Winfield and Jirotka, 2017, p.5). AI is often described as an opaque technology. It is commonly infused invisibly into computing systems in ways that can influence our interactions, options, decisions, moods and sense of self without our being aware of it (Cowie, 2015). Furthermore, the proprietary status of AI algorithms and of the datasets used to train them hinders scrutiny by independent experts. Customers must rely on industry assurances that adequate checks have been carried out regarding the privacy implications of the personal data being harvested and shared, and that the potential risks of algorithmic bias have been addressed. Relatedly, industry can have a legal obligation to protect data, which makes full disclosure problematic if bias or other harm does occur (boyd, 2016). Another reason AI can be considered opaque relates to the ‘black box’ nature of some types of ML, particularly deep learning. Some researchers suggest that black box ML should not be used in ‘safety critical systems’, where classifications, predictions and decisions made by AIs can have serious consequences for human safety or wellbeing (Winfield and Jirotka, 2018), and this includes the realm of education. Technologists have suggested technical ways in which AI systems can be made transparent (IEEE, 2019), especially in relation to how a system interprets and implements the norms that influence its decisions. However, this area remains contentious, with continuing ethical and technical debate about how to ensure transparency.

5. Accountability: Governance of AI will entail new ways of thinking about the interconnections and tensions between proprietary interests, public and transparent auditability, regulatory standards, policy and risk assessment, legal obligations, and broader social, cultural and economic responsibilities. Accountability in an AI world is an exceedingly complicated area:

(T)he complexity of (autonomous and intelligent) technology and the non-intuitive way in which it may operate will make it difficult for users of those systems to understand the actions of the (system) that they use, or with which they interact. This opacity, combined with the often distributed manner in which the (automated and intelligent systems) are developed, will complicate efforts to determine and allocate responsibility when something goes wrong. Thus, lack of transparency increases the risk and magnitude of harm when users do not understand the systems they are using, or there is a failure to fix faults and improve systems following accidents. Lack of transparency also increases the difficulty of ensuring accountability. (IEEE, 2019, p.27).

Regulation and standards that clearly identify the types of operations and decisions that should not be delegated to AI are slowly being formulated. Both manufacturers of AI systems and those procuring them need policies that address algorithmic maintenance and the preconditions for effective use, and that provide training for those implementing the systems. The IEEE (2019) suggests that algorithmic maintenance requires due diligence and sufficient investment in monitoring outcomes, handling complaints, and inspecting and replacing harmful algorithms, and that delegating this responsibility to end-users is not appropriate. Gulson and colleagues (2018) provide a sensible set of recommendations, including: developing procurement guidelines that encourage ethical, transparent design of AI; reviewing international data protection legislation to develop a suitable approach for Australian education; and establishing official guidelines for adaptive and personalised learning systems that ensure learning efficacy and equity.

Importantly, governance structures must have accessible contestability mechanisms for students, staff, and parents and care-givers, including access to independent expert technical and ethical advice, so that potential bias and other harms can be identified and responded to sooner rather than later. There are many interconnected issues, including: surveillance; algorithmic bias and discrimination; data privacy, consent and sharing arrangements; the growth and scale of integrated biometric and geolocation harvesting through administrative and learning applications; function creep (using data for purposes not originally intended); and the security of personal data and its potential for reidentification. These all raise serious ethical issues that need constant attention within transparent systems of governance, including processes for open dialogue between stakeholders in school communities and for contestability.
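To make transparency and contestability less abstract, the sketch below shows one hypothetical way a school system might keep a record of each automated decision: which model version made it, which training dataset that model came from (the kind of provenance Campolo et al. recommend tracking), what inputs were used, and a plain-English reason, so that a student, parent or independent reviewer can retrieve and contest it. The class and field names are assumptions made for illustration, loosely in the spirit of Winfield and Jirotka’s ‘ethical black box’; they do not reproduce any published standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One automated decision, kept so it can later be explained and contested (hypothetical schema)."""
    subject_id: str        # pseudonymous identifier, not a student's name
    model_version: str     # which version of the algorithm made the decision
    training_data_id: str  # provenance: which dataset version trained that model
    inputs: dict           # the data the system actually used
    outcome: str
    reason: str            # plain-English explanation for the person affected
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class DecisionLog:
    """Hypothetical store of automated decisions and of challenges raised against them."""

    def __init__(self) -> None:
        self._records = []
        self._contested = []

    def record(self, decision: DecisionRecord) -> None:
        self._records.append(decision)

    def decisions_for(self, subject_id: str) -> list:
        """Retrieve every decision made about one person, e.g. at a student's or parent's request."""
        return [r for r in self._records if r.subject_id == subject_id]

    def contest(self, decision: DecisionRecord, grounds: str) -> None:
        """Flag a decision for review by an independent human expert."""
        self._contested.append((decision, grounds))

    def awaiting_review(self) -> list:
        return list(self._contested)


# Hypothetical example: an adaptive tutoring system assigns a curriculum pathway.
log = DecisionLog()
decision = DecisionRecord(
    subject_id="student-042",
    model_version="pathway-model-1.3",
    training_data_id="enrolments-2019-v2",
    inputs={"quiz_average": 0.45},
    outcome="foundation pathway",
    reason="Quiz average below the 0.5 cut-off used by the model",
)
log.record(decision)
log.contest(decision, "Two quiz results were missing from the data")
print(len(log.decisions_for("student-042")), len(log.awaiting_review()))
```

A record like this does not make a black box model explainable on its own, but without one there is nothing for an affected student or an independent expert to examine.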

Concluding remarks

As educators we must become actively involved in asking ethical questions about computing systems that can automate or profoundly affect decision-making, and whose predictive and classificatory functions can have impacts, positive and negative, on how students and teachers are viewed and treated. We are fortunate in that the integration of AI into schooling systems is just arriving – we have a window of opportunity to skill up on technical, pedagogical and ethical matters related to the technology. While there has been some discussion about the de-skilling of teachers through automation, we must turn more attention to collectively asking questions about the computing systems introduced into schools, the data harvested through them, how that data is used and shared, and the effects this has on teachers and students. The introduction of automated and intelligent systems which influence decisions and make predictions and classifications about humans should only occur with caution and as part of a broader pedagogical and ethical project that empowers school communities to make informed choices about the design, implementation and governance of AI in schools.

Postscript: I am currently conducting research on the ethics of AI in education and am looking for teachers, school leaders and policy makers to interview. If you are interested in finding out more please email me – [email protected]

References

boyd, D. (2016). "Transparency != Accountability". Address to EU Parliament Event 07/11/16 on Algorithmic Accountability and Transparency. Brussels, Belgium.

boyd, D., & Crawford, K. (2012). Critical Questions for Big Data: Provocations for a Cultural, Technological, and Scholarly Phenomenon. Information, Communication & Society, 15(5), 662–679.

Campolo, A., Sanfilippo, M., Whittaker, M., & Crawford, K. (2017). AI Now 2017 Report. New York: AI Now Institute. Retrieved from https://ainowinstitute.org/

Cowie, R. (2015). Ethical Issues in Affective Computing. In R. A. Calvo, S. D'Mello, & J. Gratch (Eds.), The Oxford Handbook of Affective Computing (pp. 334–348). New York: Oxford University Press.

Faggella, D. (2018). What is Machine Learning? Retrieved from https://emerj.com/ai-glossary-terms/what-is-machine-learning/

Gulson, K. N., Murphie, A., Taylor, S., & Sellar, S. (2018). Education, Work and Australian Society in an AI World. A Review of Research Literature and Policy Recommendations. Sydney: Gonski Institute for Education, UNSW.

Hardesty, L. (2017). Explained: Neural Networks. Retrieved from http://news.mit.edu/2017/explained-neural-networks-deep-learning-0414

Hintze, A. (2016, Nov 14). Understanding the four types of AI, from reactive robots to self-aware beings. The Conversation. Retrieved from https://theconversation.com/understanding-the-four-types-of-ai-from-reactive-robots-to-self-aware-beings-67616

IEEE. (2019). Ethically aligned design. A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems. Retrieved from https://standards.ieee.org/content/dam/ieee-standards/standards/web/documents/other/ead1e.pdf

LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep Learning. Nature, 521(7553), 436.

Luckin, R., Holmes, W., Griffiths, M., & Forcier, L. B. (2016). Intelligence Unleashed: An Argument for AI in Education. London: Pearson.

Maini, V., & Sabri, S. (2017). Machine Learning for Humans. Retrieved from https://everythingcomputerscience.com/books/Machine%20Learning%20for%20Humans.pdf

Manyika, J., Silberg, J., & Presten, B. (2019). What do we do about the Biases in AI? Retrieved from https://hbr.org/2019/10/what-do-we-do-about-the-biases-in-ai

Michalik, P., Štofa, J., & Zolotova, I. (2014). Concept Definition for Big Data Architecture in the Education System. In 2014 IEEE 12th International Symposium on Applied Machine Intelligence and Informatics (SAMI) (pp. 331–334). IEEE.

Mitchell, T., & Brynjolfsson, E. (2017). Track how Technology is Transforming Work. Nature News, 544(7650), 290. Retrieved from https://www.nature.com/news/track-how-technology-is-transforming-work-1.21837

OECD (2019). Recommendation of the Council on Artificial Intelligence. OECD Legal Instruments. Retrieved from https://legalinstruments.oecd.org/en/instruments/OECD-LEGAL-0449

Ramzai, J. (2020). Clearly Explained: 4 Types of Machine Learning Algorithms. Retrieved from https://towardsdatascience.com/clearly-explained-4-types-of-machine-learning-algorithms-71304380c59a

Southgate, E., Blackmore, K., Pieschl, S., Grimes, S., McGuire, J. & Smithers, K. (2018). Artificial Intelligence and Emerging Technologies (Virtual, Augmented and Mixed Reality) in Schools: A Research Report. Commissioned by the Australian Government. Retrieved

Wagner, A. R., Borenstein, J., & Howard, A. (2018). Overtrust in the robotic age. Communications of the ACM, 61(9), 22–24. Retrieved from https://cacm.acm.org/magazines/2018/9/230593-overtrust-in-the-robotic-age/fulltext

Wachter, S., Mittelstadt, B., & Russell, C. (2020). Why Fairness Cannot be Automated: Bridging the Gap between EU Non-discrimination Law and AI. Available at SSRN.

Winfield, A. F., & Jirotka, M. (2017). The Case for an Ethical Black Box. In Conference Towards Autonomous Robotic Systems (pp. 262–273). Cham, Switzerland: Springer.

Winfield, A. F., & Jirotka, M. (2018). Ethical Governance is Essential to Building Trust in Robotics and Artificial Intelligence Systems. Philosophical Transactions of the Royal Society A-Mathematical Physical and Engineering Sciences, 376(2133), 1–13.

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic Review of Research on Artificial Intelligence Applications in Higher Education–Where are the Educators?. International Journal of Educational Technology in Higher Education, 16(1), 1–27.

Erica Southgate is Associate Professor of Emerging Technologies for Education at the University of Newcastle, Australia. She is Lead Researcher of the VR School Study and a maker of computer games for literacy learning. She was a 2016 National Equity Fellow and 2017 Innovation Award Winner for the Australasian Society for Computers in Learning in Tertiary Education (ASCILITE). She co-authored the research report, ‘Artificial Intelligence and Emerging Technologies in Schools’ and has free infographic classroom posters on AI for primary and secondary students available here. For more on Erica’s research see https://ericasouthgateonline.wordpress.com/projects/.

This article appears in Professional Voice 13.2 Learning in the shadow of the pandemic.